Meet Michelangelo: Uber’s Machine Learning Platform

Jeremy Hermann and Mike Del Balso September 5, 2017

借鉴点:

- 特征的统一管理(在线、离线一致)

- 模型的全生命周期管理(如训练数据)

- 特征、模型的可视化报告

~~~~~ 以下为原文及翻译 ~~~~~

- Motivation behind Michelangelo

- Use case: UberEATS estimated time of delivery model

- System architecture

- Machine learning workflow

- Building on the Michelangelo platform

- Major Updates

Uber Engineering is committed to developing technologies that create seamless, impactful experiences for our customers. We are increasingly investing in artificial intelligence (AI) and machine learning (ML) to fulfill this vision. At Uber, our contribution to this space is Michelangelo, an internal ML-as-a-service platform that democratizes machine learning and makes scaling AI to meet the needs of business as easy as requesting a ride.

优步工程致力于开发技术,为我们的客户创造无缝、有影响力的体验。我们越来越多地投资于人工智能 (AI) 和机器学习 (ML) 以实现这一愿景。在优步,我们对这个领域的贡献是 Michelangelo,这是一个内部 ML 即服务平台,它使机器学习民主化并使扩展 AI 以满足业务需求就像叫车一样简单。

Michelangelo enables internal teams to seamlessly build, deploy, and operate machine learning solutions at Uber’s scale. It is designed to cover the end-to-end ML workflow: manage data, train, evaluate, and deploy models, make predictions, and monitor predictions. The system also supports traditional ML models, time series forecasting, and deep learning.

Michelangelo 使内部团队能够以 Uber 的规模无缝构建、部署和运行机器学习解决方案。它旨在涵盖端到端 ML 工作流程:管理数据、训练、评估和部署模型、进行预测和监控预测。该系统还支持传统的机器学习模型、时间序列预测和深度学习。

Michelangelo has been serving production use cases at Uber for about a year and has become the de-facto system for machine learning for our engineers and data scientists, with dozens of teams building and deploying models. In fact, it is deployed across several Uber data centers, leverages specialized hardware, and serves predictions for the highest loaded online services at the company.

Michelangelo 已经在 Uber 服务生产用例大约一年,并已成为我们工程师和数据科学家的机器学习事实上的系统,有数十个团队构建和部署模型。事实上,它部署在多个 Uber 数据中心,利用专用硬件,并为公司最高负载的在线服务提供预测。

In this article, we introduce Michelangelo, discuss product use cases, and walk through the workflow of this powerful new ML-as-a-service system.

在本文中,我们将介绍 Michelangelo,讨论产品用例,并演练这个强大的新机器学习即服务系统的工作流程。

Motivation behind Michelangelo

Before Michelangelo, we faced a number of challenges with building and deploying machine learning models at Uber related to the size and scale of our operations. While data scientists were using a wide variety of tools to create predictive models (R, scikit-learn, custom algorithms, etc.), separate engineering teams were also building bespoke one-off systems to use these models in production. As a result, the impact of ML at Uber was limited to what a few data scientists and engineers could build in a short time frame with mostly open source tools.

在 Michelangelo 之前,我们在 Uber 构建和部署机器学习模型时面临着许多与我们运营规模和规模相关的挑战。 虽然数据科学家使用各种工具来创建预测模型(R、scikit-learn、自定义算法等),但独立的工程团队也在构建定制的一次性系统以在生产中使用这些模型。 因此,机器学习对 Uber 的影响仅限于少数数据科学家和工程师可以在短时间内使用大部分开源工具构建的内容。

Specifically, there were no systems in place to build reliable, uniform, and reproducible pipelines for creating and managing training and prediction data at scale. Prior to Michelangelo, it was not possible to train models larger than what would fit on data scientists’ desktop machines, and there was neither a standard place to store the results of training experiments nor an easy way to compare one experiment to another. Most importantly, there was no established path to deploying a model into production–in most cases, the relevant engineering team had to create a custom serving container specific to the project at hand. At the same time, we were starting to see signs of many of the ML anti-patterns documented by Scully et al.

具体来说,没有适当的系统来构建可靠、统一和可重复的管道,以大规模创建和管理训练和预测数据。 在米开朗基罗之前,不可能训练出比数据科学家的台式机更大的模型,也没有一个标准的地方来存储训练实验的结果,也没有一种简单的方法可以将一个实验与另一个进行比较。 最重要的是,没有既定的路径将模型部署到生产中——在大多数情况下,相关的工程团队必须创建一个特定于手头项目的自定义服务容器。 与此同时,我们开始看到 Scully 等人记录的许多 ML 反模式的迹象。

Michelangelo is designed to address these gaps by standardizing the workflows and tools across teams though an end-to-end system that enables users across the company to easily build and operate machine learning systems at scale. Our goal was not only to solve these immediate problems, but also create a system that would grow with the business.

Michelangelo 旨在通过端到端系统标准化跨团队的工作流程和工具来解决这些差距,该系统使整个公司的用户能够轻松地大规模构建和操作机器学习系统。 我们的目标不仅是解决这些迫在眉睫的问题,而且还要创建一个可以与业务一起发展的系统。

When we began building Michelangelo in mid 2015, we started by addressing the challenges around scalable model training and deployment to production serving containers. Then, we focused on building better systems for managing and sharing feature pipelines. More recently, the focus shifted to developer productivity–how to speed up the path from idea to first production model and the fast iterations that follow.

当我们在 2015 年年中开始构建 Michelangelo 时,我们首先解决了围绕可扩展模型训练和部署到生产服务容器的挑战。 然后,我们专注于构建更好的系统来管理和共享特征管道。 最近,重点转移到了开发人员的生产力——如何加快从想法到第一个生产模型的过程以及随后的快速迭代。

In the next section, we look at an example application to understand how Michelangelo has been used to build and deploy models to solve specific problems at Uber. While we highlight a specific use case for UberEATS, the platform manages dozens of similar models across the company for a variety of prediction use cases.

在下一节中,我们将查看一个示例应用程序,以了解 Michelangelo 如何用于构建和部署模型以解决 Uber 的特定问题。 虽然我们强调了 UberEATS 的一个特定用例,但该平台管理着整个公司的数十个类似模型,用于各种预测用例。

Use case: UberEATS estimated time of delivery model

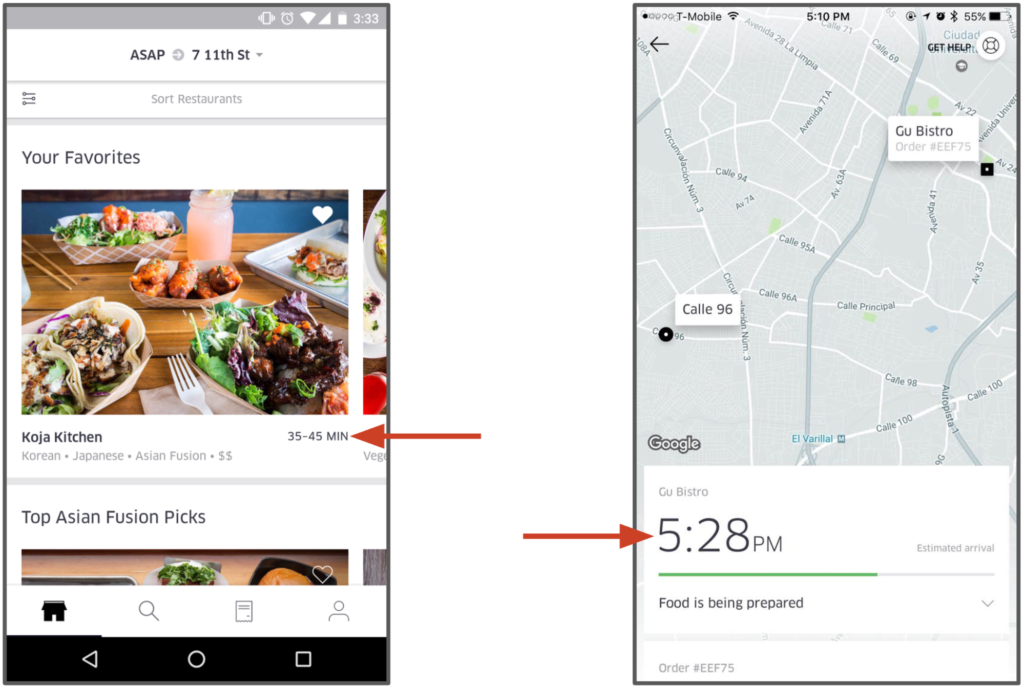

UberEATS has several models running on Michelangelo, covering meal delivery time predictions, search rankings, search autocomplete, and restaurant rankings. The delivery time models predict how much time a meal will take to prepare and deliver before the order is issued and then again at each stage of the delivery process.

UberEATS 在 Michelangelo 上运行了多个模型,涵盖送餐时间预测、搜索排名、搜索自动完成和餐厅排名。 交付时间模型预测在下订单之前准备和交付餐点所需的时间,然后在交付过程的每个阶段再次预测。

Figure 1: The UberEATS app hosts an estimated delivery time feature powered by machine learning models built on Michelangelo.

Figure 1: The UberEATS app hosts an estimated delivery time feature powered by machine learning models built on Michelangelo.

Predicting meal estimated time of delivery (ETD) is not simple. When an UberEATS customer places an order it is sent to the restaurant for processing. The restaurant then needs to acknowledge the order and prepare the meal which will take time depending on the complexity of the order and how busy the restaurant is. When the meal is close to being ready, an Uber delivery-partner is dispatched to pick up the meal. Then, the delivery-partner needs to get to the restaurant, find parking, walk inside to get the food, then walk back to the car, drive to the customer’s location (which depends on route, traffic, and other factors), find parking, and walk to the customer’s door to complete the delivery. The goal is to predict the total duration of this complex multi-stage process, as well as recalculate these time-to-delivery predictions at every step of the process.

预测膳食预计送达时间 (ETD) 并不简单。 当 UberEATS 客户下订单时,订单会被发送到餐厅进行处理。 然后,餐厅需要确认订单并准备餐点,这需要时间,具体取决于订单的复杂程度和餐厅的繁忙程度。 当餐点快准备好时,优步派送员会被派去取餐。 然后,外卖小伙伴需要到餐厅,找到停车位,走进去拿食物,然后走回车上,开车到顾客所在的位置(取决于路线、交通和其他因素),找到停车位 ,并走到客户家门口完成送货。 目标是预测这个复杂的多阶段流程的总持续时间,并在流程的每一步重新计算这些交付时间预测。

On the Michelangelo platform, the UberEATS data scientists use gradient boosted decision tree regression models to predict this end-to-end delivery time. Features for the model include information from the request (e.g., time of day, delivery location), historical features (e.g. average meal prep time for the last seven days), and near-realtime calculated features (e.g., average meal prep time for the last one hour). Models are deployed across Uber’s data centers to Michelangelo model serving containers and are invoked via network requests by the UberEATS microservices. These predictions are displayed to UberEATS customers prior to ordering from a restaurant and as their meal is being prepared and delivered.

在 Michelangelo 平台上,UberEATS 数据科学家使用梯度提升决策树回归模型来预测这种端到端的交付时间。 该模型的特征包括来自请求的信息(例如,一天中的时间、交付地点)、历史特征(例如过去 7 天的平均膳食准备时间)和近实时计算的特征(例如,平均膳食准备时间) 最后一小时)。 模型跨 Uber 的数据中心部署到 Michelangelo 模型服务容器,并由 UberEATS 微服务通过网络请求调用。 这些预测会在 UberEATS 客户从餐厅订购之前以及在准备和交付餐点时显示给他们。

System architecture

Michelangelo consists of a mix of open source systems and components built in-house. The primary open sourced components used are HDFS, Spark, Samza, Cassandra, MLLib, XGBoost, and TensorFlow. We generally prefer to use mature open source options where possible, and will fork, customize, and contribute back as needed, though we sometimes build systems ourselves when open source solutions are not ideal for our use case.

Michelangelo 由开源系统和内部构建的组件组成。 使用的主要开源组件是 HDFS、Spark、Samza、Cassandra、MLLib、XGBoost 和 TensorFlow。 我们通常更喜欢在可能的情况下使用成熟的开源选项,并会根据需要分叉、定制和回馈,尽管有时我们会在开源解决方案不适合我们的用例时自己构建系统。

Michelangelo is built on top of Uber’s data and compute infrastructure, providing a data lake that stores all of Uber’s transactional and logged data, Kafka brokers that aggregate logged messages from all Uber’s services, a Samza streaming compute engine, managed Cassandra clusters, and Uber’s in-house service provisioning and deployment tools.

Michelangelo 建立在 Uber 的数据和计算基础设施之上,提供了一个数据湖来存储 Uber 的所有交易和记录数据、聚合来自所有 Uber 服务的记录消息的 Kafka 代理、一个 Samza 流计算引擎、托管的 Cassandra 集群,以及 Uber 的 -house 服务供应和部署工具。

In the next section, we walk through the layers of the system using the UberEATS ETD models as a case study to illustrate the technical details of Michelangelo.

在下一节中,我们将使用 UberEATS ETD 模型作为案例研究来介绍系统的各个层,以说明 Michelangelo 的技术细节。

Machine learning workflow

The same general workflow exists across almost all machine learning use cases at Uber regardless of the challenge at hand, including classification and regression, as well as time series forecasting. The workflow is generally implementation-agnostic, so easily expanded to support new algorithm types and frameworks, such as newer deep learning frameworks. It also applies across different deployment modes such as both online and offline (and in-car and in-phone) prediction use cases.

无论手头面临何种挑战,包括分类和回归以及时间序列预测,Uber 的几乎所有机器学习用例都存在相同的通用工作流程。 工作流通常与实现无关,因此很容易扩展以支持新的算法类型和框架,例如较新的深度学习框架。 它还适用于不同的部署模式,例如在线和离线(以及车内和电话)预测用例。

We designed Michelangelo specifically to provide scalable, reliable, reproducible, easy-to-use, and automated tools to address the following six-step workflow:

- Manage data

- Train models

- Evaluate models

- Deploy models

- Make predictions

- Monitor predictions

我们专门设计 Michelangelo 以提供可扩展、可靠、可重复、易于使用和自动化的工具,以解决以下六步工作流程:

- 管理数据

- 训练模型

- 评估模型

- 部署模型

- 作出预测

- 监控预测

Next, we go into detail about how Michelangelo’s architecture facilitates each stage of this workflow.

接下来,我们将详细介绍米开朗基罗的架构如何促进此工作流程的每个阶段。

Manage data

Finding good features is often the hardest part of machine learning and we have found that building and managing data pipelines is typically one of the most costly pieces of a complete machine learning solution.

找到好的特征通常是机器学习中最困难的部分,我们发现构建和管理数据管道通常是完整机器学习解决方案中成本最高的部分之一。

A platform should provide standard tools for building data pipelines to generate feature and label data sets for training (and re-training) and feature-only data sets for predicting. These tools should have deep integration with the company’s data lake or warehouses and with the company’s online data serving systems. The pipelines need to be scalable and performant, incorporate integrated monitoring for data flow and data quality, and support both online and offline training and predicting. Ideally, they should also generate the features in a way that is shareable across teams to reduce duplicate work and increase data quality. They should also provide strong guard rails and controls to encourage and empower users to adopt best practices (e.g., making it easy to guarantee that the same data generation/preparation process is used at both training time and prediction time).

平台应提供用于构建数据管道的标准工具,以生成用于训练(和重新训练)的特征和标签数据集以及用于预测的仅特征数据集。 这些工具应该与公司的数据湖或仓库以及公司的在线数据服务系统深度集成。 管道需要具有可扩展性和高性能,包含对数据流和数据质量的集成监控,并支持在线和离线培训和预测。 理想情况下,他们还应该以可跨团队共享的方式生成特征,以减少重复工作并提高数据质量。 他们还应该提供强大的防护栏和控制措施,以鼓励和授权用户采用最佳实践(例如,轻松保证在训练时间和预测时间使用相同的数据生成/准备过程)。

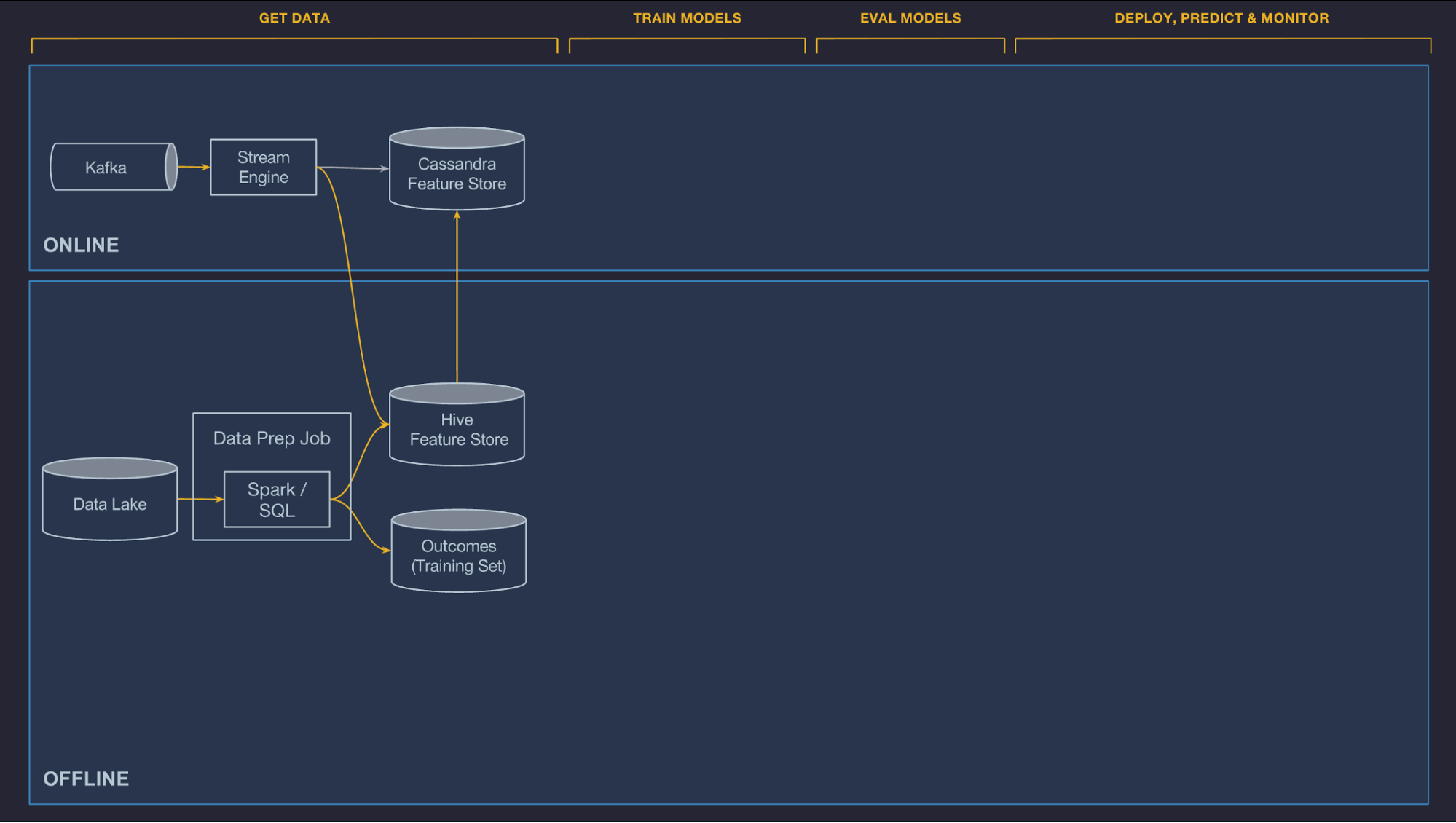

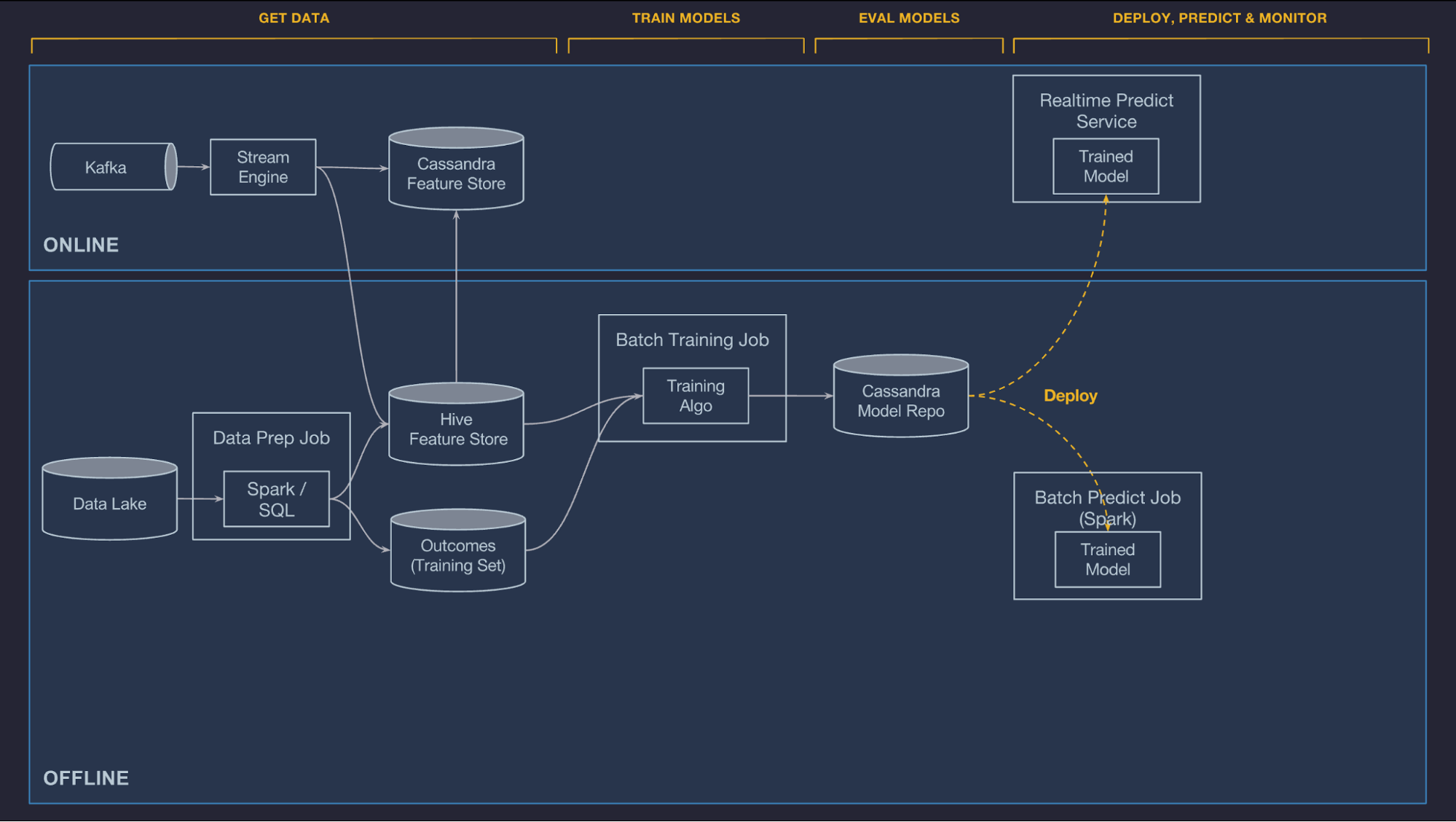

The data management components of Michelangelo are divided between online and offline pipelines. Currently, the offline pipelines are used to feed batch model training and batch prediction jobs and the online pipelines feed online, low latency predictions (and in the near future, online learning systems).

Michelangelo 的数据管理组件分为在线和离线管道。 目前,离线管道用于提供批量模型训练和批量预测作业,而在线管道用于提供在线低延迟预测(以及在不久的将来,在线学习系统)。

In addition, we added a layer of data management, a feature store that allows teams to share, discover, and use a highly curated set of features for their machine learning problems. We found that many modeling problems at Uber use identical or similar features, and there is substantial value in enabling teams to share features between their own projects and for teams in different organizations to share features with each other.

此外,我们添加了一个数据管理层,一个特征存储,允许团队共享、发现和使用一组高度策划的特征来解决他们的机器学习问题。 我们发现 Uber 的许多建模问题都使用相同或相似的特征,让团队在自己的项目之间共享特征以及让不同组织的团队相互共享特征具有重大价值。

Figure 2: Data preparation pipelines push data into the Feature Store tables and training data repositories.

Figure 2: Data preparation pipelines push data into the Feature Store tables and training data repositories.

Offline

Uber’s transactional and log data flows into an HDFS data lake and is easily accessible via Spark and Hive SQL compute jobs. We provide containers and scheduling to run regular jobs to compute features which can be made private to a project or published to the Feature Store (see below) and shared across teams, while batch jobs run on a schedule or a trigger and are integrated with data quality monitoring tools to quickly detect regressions in the pipeline–either due to local or upstream code or data issues.

优步的事务和日志数据流入 HDFS 数据湖,可通过 Spark 和 Hive SQL 计算作业轻松访问。 我们提供容器和调度来运行常规作业来计算特征,这些特征可以为项目私有或发布到特征存储(见下文)并在团队之间共享,而批处理作业按计划或触发器运行并与数据集成 质量监控工具,用于快速检测管道中的回归——无论是由于本地或上游代码或数据问题。

Online

Models that are deployed online cannot access data stored in HDFS, and it is often difficult to compute some features in a performant manner directly from the online databases that back Uber’s production services (for instance, it is not possible to directly query the UberEATS order service to compute the average meal prep time for a restaurant over a specific period of time). Instead, we allow features needed for online models to be precomputed and stored in Cassandra where they can be read at low latency at prediction time.

在线部署的模型无法访问存储在 HDFS 中的数据,并且通常很难直接从支持 Uber 生产服务的在线数据库中以高性能方式计算某些特征(例如,无法直接查询 UberEATS 订单服务计算特定时间段内餐厅的平均膳食准备时间)。相反,我们允许预先计算在线模型所需的特征并将其存储在 Cassandra 中,在那里可以在预测时以低延迟读取它们。

We support two options for computing these online-served features, batch precompute and near-real-time compute, outlined below:

- Batch precompute. The first option for computing is to conduct bulk precomputing and loading historical features from HDFS into Cassandra on a regular basis. This is simple and efficient, and generally works well for historical features where it is acceptable for the features to only be updated every few hours or once a day. This system guarantees that the same data and batch pipeline is used for both training and serving. UberEATS uses this system for features like a ‘restaurant’s average meal preparation time over the last seven days.’

- Near-real-time compute. The second option is to publish relevant metrics to Kafka and then run Samza-based streaming compute jobs to generate aggregate features at low latency. These features are then written directly to Cassandra for serving and logged back to HDFS for future training jobs. Like the batch system, near-real-time compute ensures that the same data is used for training and serving. To avoid a cold start, we provide a tool to “backfill” this data and generate training data by running a batch job against historical logs. UberEATS uses this near-realtime pipeline for features like a ‘restaurant’s average meal preparation time over the last one hour.’

我们支持两种计算这些在线服务特征的选项,批量预计算和近实时计算,概述如下:

- 批量预计算。计算的第一个选择是进行批量预计算,并定期将 HDFS 中的历史特征加载到 Cassandra 中。这既简单又高效,并且通常适用于历史特征,其中特征仅每隔几小时或一天更新一次是可以接受的。该系统保证相同的数据和批处理管道用于训练和服务。 UberEATS 将该系统用于“餐厅过去 7 天平均膳食准备时间”等特征。

- 近实时计算。第二种选择是将相关指标发布到 Kafka,然后运行基于 Samza 的流计算作业以低延迟生成聚合特征。然后将这些特征直接写入 Cassandra 以供服务并记录回 HDFS 以供将来的训练作业。与批处理系统一样,近实时计算可确保将相同的数据用于训练和服务。为了避免冷启动,我们提供了一个工具来“回填”这些数据,并通过针对历史日志运行批处理作业来生成训练数据。 UberEATS 将这种近实时管道用于诸如“餐厅过去一小时平均膳食准备时间”之类的特征。

Shared feature store

We found great value in building a centralized Feature Store in which teams around Uber can create and manage canonical features to be used by their teams and shared with others. At a high level, it accomplishes two things:

- It allows users to easily add features they have built into a shared feature store, requiring only a small amount of extra metadata (owner, description, SLA, etc.) on top of what would be required for a feature generated for private, project-specific usage.

- Once features are in the Feature Store, they are very easy to consume, both online and offline, by referencing a feature’s simple canonical name in the model configuration. Equipped with this information, the system handles joining in the correct HDFS data sets for model training or batch prediction and fetching the right value from Cassandra for online predictions.

我们发现构建集中式特征存储非常有价值,优步周围的团队可以在其中创建和管理供其团队使用并与他人共享的规范特征。在高层次上,它完成了两件事:

- 它允许用户轻松地将他们内置的特征添加到共享特征存储中,在为私有、项目生成的特征所需的基础上只需要少量额外的元数据(所有者、描述、SLA 等)。具体用法。

- 一旦特征在特征存储中,通过在模型配置中引用特征的简单规范名称,它们很容易在线和离线使用。配备这些信息后,系统会处理加入正确的 HDFS 数据集以进行模型训练或批量预测,并从 Cassandra 获取正确的值以进行在线预测。

At the moment, we have approximately 10,000 features in Feature Store that are used to accelerate machine learning projects, and teams across the company are adding new ones all the time. Features in the Feature Store are automatically calculated and updated daily.

目前,我们在 Feature Store 中有大约 10,000个特征用于加速机器学习项目,整个公司的团队一直在添加新特征。 Feature Store 中的特征每天都会自动计算和更新。

In the future, we intend to explore the possibility of building an automated system to search through Feature Store and identify the most useful and important features for solving a given prediction problem.

未来,我们打算探索构建自动化系统来搜索特征存储并确定最有用和最重要的特征以解决给定预测问题的可能性。

Domain specific language for feature selection and transformation

Often the features generated by data pipelines or sent from a client service are not in the proper format for the model, and they may be missing values that need to be filled. Moreover, the model may only need a subset of features provided. In some cases, it may be more useful for the model to transform a timestamp into an hour-of-day or day-of-week to better capture seasonal patterns. In other cases, feature values may need to be normalized (e.g., subtract the mean and divide by standard deviation).

通常,由数据管道生成或从客户端服务发送的特征的格式不适合模型,它们可能是需要填充的缺失值。此外,模型可能只需要提供一个特征子集。在某些情况下,模型将时间戳转换为一天中的一小时或一周中的某一天以更好地捕捉季节性模式可能更有用。在其他情况下,可能需要对特征值进行归一化(例如,减去均值并除以标准差)。

To address these issues, we created a DSL (domain specific language) that modelers use to select, transform, and combine the features that are sent to the model at training and prediction times. The DSL is implemented as sub-set of Scala. It is a pure functional language with a complete set of commonly used functions. With this DSL, we also provide the ability for customer teams to add their own user-defined functions. There are accessor functions that fetch feature values from the current context (data pipeline in the case of an offline model or current request from client in the case of an online model) or from the Feature Store.

为了解决这些问题,我们创建了一个 DSL(领域特定语言),建模人员使用它来选择、转换和组合在训练和预测时发送到模型的特征。 DSL 是作为 Scala 的子集实现的。它是一门纯函数式语言,拥有一套完整的常用函数。通过这个 DSL,我们还为客户团队提供了添加他们自己的用户定义函数的能力。有一些访问器函数可以从当前上下文(离线模型中的数据管道或在线模型中来自客户端的当前请求)或特征存储中获取特征值。

It is important to note that the DSL expressions are part of the model configuration and the same expressions are applied at training time and at prediction time to help guarantee that the same final set of features is generated and sent to the model in both cases.

需要注意的是,DSL 表达式是模型配置的一部分,并且在训练时和预测时应用相同的表达式,以帮助确保在两种情况下生成相同的最终特征集并将其发送到模型。

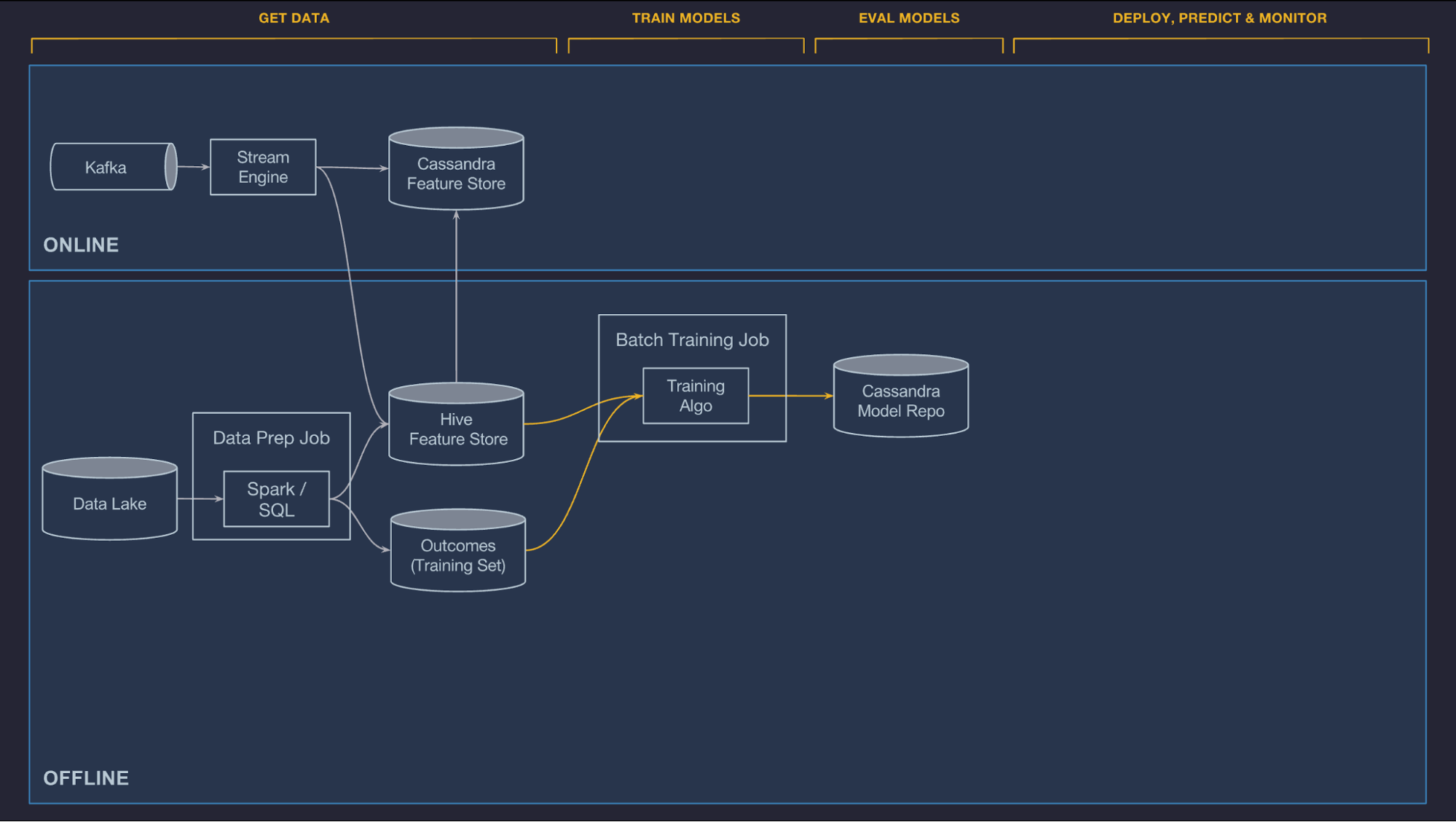

Train models

We currently support offline, large-scale distributed training of decision trees, linear and logistic models, unsupervised models (k-means), time series models, and deep neural networks. We regularly add new algorithms in response to customer need and as they are developed by Uber’s AI Labs and other internal researchers. In addition, we let customer teams add their own model types by providing custom training, evaluation, and serving code. The distributed model training system scales up to handle billions of samples and down to small datasets for quick iterations.

我们目前支持离线、大规模分布式决策树、线性和逻辑模型、无监督模型(k-means)、时间序列模型和深度神经网络的训练。我们定期添加新算法以响应客户需求,因为它们是由 Uber 的 AI 实验室和其他内部研究人员开发的。此外,我们通过提供自定义培训、评估和服务代码,让客户团队添加自己的模型类型。分布式模型训练系统向上扩展以处理数十亿个样本,向下扩展到小数据集以进行快速迭代。

A model configuration specifies the model type, hyper-parameters, data source reference, and feature DSL expressions, as well as compute resource requirements (the number of machines, how much memory, whether or not to use GPUs, etc.). It is used to configure the training job, which is run on a YARN or Mesos cluster.

模型配置指定模型类型、超参数、数据源引用和特征 DSL 表达式,以及计算资源要求(机器数量、内存多少、是否使用 GPU 等)。它用于配置在 YARN 或 Mesos 集群上运行的训练作业。

After the model is trained, performance metrics (e.g., ROC curve and PR curve) are computed and combined into a model evaluation report. At the end of training, the original configuration, the learned parameters, and the evaluation report are saved back to our model repository for analysis and deployment.

模型训练完成后,计算性能指标(例如 ROC 曲线和 PR 曲线)并组合成模型评估报告。在训练结束时,将原始配置、学习参数和评估报告保存回我们的模型存储库进行分析和部署。

In addition to training single models, Michelangelo supports hyper-parameter search for all model types as well as partitioned models. With partitioned models, we automatically partition the training data based on configuration from the user and then train one model per partition, falling back to a parent model when needed (e.g. training one model per city and falling back to a country-level model when an accurate city-level model cannot be achieved).

除了训练单个模型外,Michelangelo 还支持所有模型类型以及分区模型的超参数搜索。使用分区模型,我们根据用户的配置自动对训练数据进行分区,然后每个分区训练一个模型,在需要时回退到父模型(例如,每个城市训练一个模型,当需要时回退到国家级模型)无法实现准确的城市级模型)。

Training jobs can be configured and managed through a web UI or an API, often via Jupyter notebook. Many teams use the API and workflow tools to schedule regular re-training of their models.

可以通过 Web UI 或 API(通常通过 Jupyter notebook)配置和管理训练作业。许多团队使用 API 和工作流工具来安排对其模型的定期重新训练。

Figure 3: Model training jobs use Feature Store and training data repository data sets to train models and then push them to the model repository.

Figure 3: Model training jobs use Feature Store and training data repository data sets to train models and then push them to the model repository.

Evaluate models

Models are often trained as part of a methodical exploration process to identify the set of features, algorithms, and hyper-parameters that create the best model for their problem. Before arriving at the ideal model for a given use case, it is not uncommon to train hundreds of models that do not make the cut. Though not ultimately used in production, the performance of these models guide engineers towards the model configuration that results in the best model performance. Keeping track of these trained models (e.g. who trained them and when, on what data set, with which hyper-parameters, etc.), evaluating them, and comparing them to each other are typically big challenges when dealing with so many models and present opportunities for the platform to add a lot of value.

模型通常作为有条理的探索过程的一部分进行训练,以识别为他们的问题创建最佳模型的一组特征、算法和超参数。在为给定用例找到理想模型之前,训练数百个未达到要求的模型并不少见。尽管最终并未用于生产,但这些模型的性能会引导工程师进行模型配置,从而实现最佳模型性能。跟踪这些经过训练的模型(例如,谁训练它们以及何时、在什么数据集上、使用哪些超参数等)、评估它们并将它们相互比较,在处理如此多的模型时通常是一个巨大的挑战。平台有机会增加很多价值。

For every model that is trained in Michelangelo, we store a versioned object in our model repository in Cassandra that contains a record of:

- Who trained the model

- Start and end time of the training job

- Full model configuration (features used, hyper-parameter values, etc.)

- Reference to training and test data sets

- Distribution and relative importance of each feature

- Model accuracy metrics

- Standard charts and graphs for each model type (e.g. ROC curve, PR curve, and confusion matrix for a binary classifier)

- Full learned parameters of the model

- Summary statistics for model visualization

The information is easily available to the user through a web UI and programmatically through an API, both for inspecting the details of an individual model and for comparing one or more models with each other.

对于在 Michelangelo 中训练的每个模型,我们在 Cassandra 的模型存储库中存储一个版本化对象,其中包含以下记录:

- 谁训练了模型

- 训练作业的开始和结束时间

- 完整的模型配置(使用的特征、超参数值等)

- 参考训练和测试数据集

- 每个特征的分布和相对重要性

- 模型准确度指标

- 每种模型类型的标准图表(例如,ROC 曲线、PR 曲线和二元分类器的混淆矩阵)

- 模型的完整学习参数

- 模型可视化的汇总统计

用户可以通过 Web UI 和以编程方式通过 API 轻松获得这些信息,既可用于检查单个模型的详细信息,也可用于比较一个或多个模型。

Model accuracy report

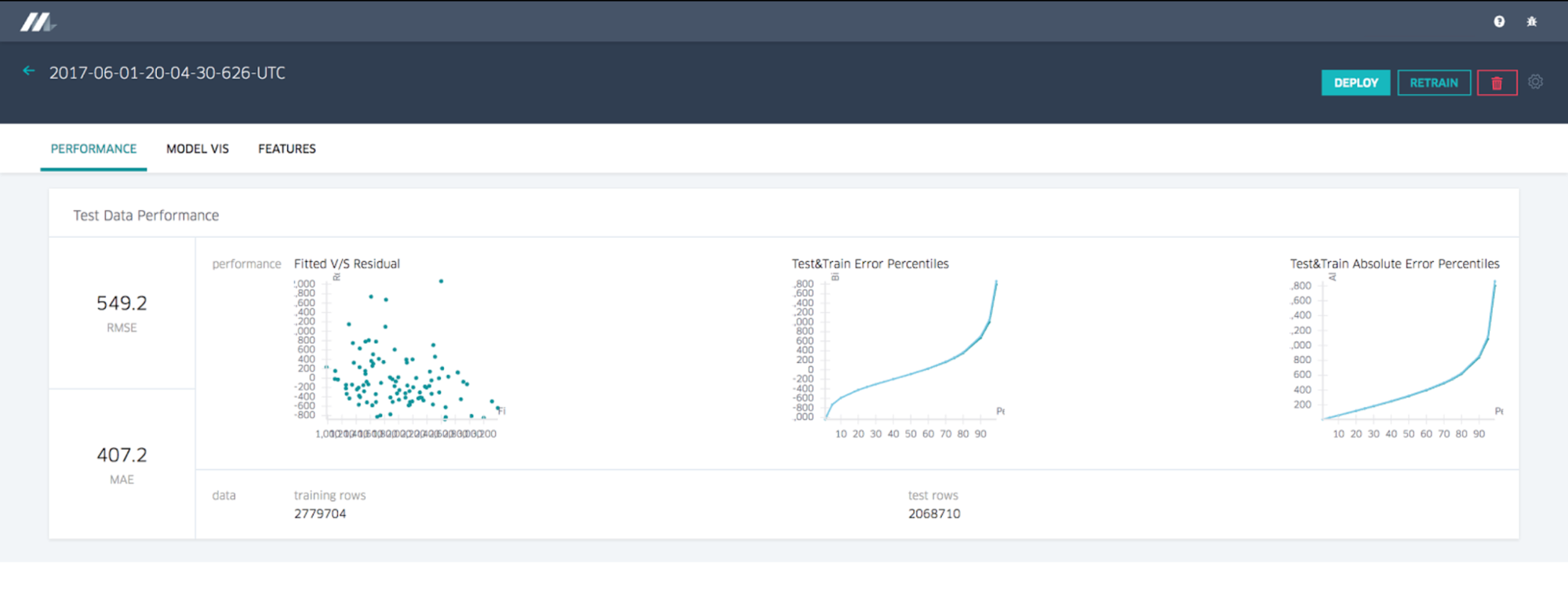

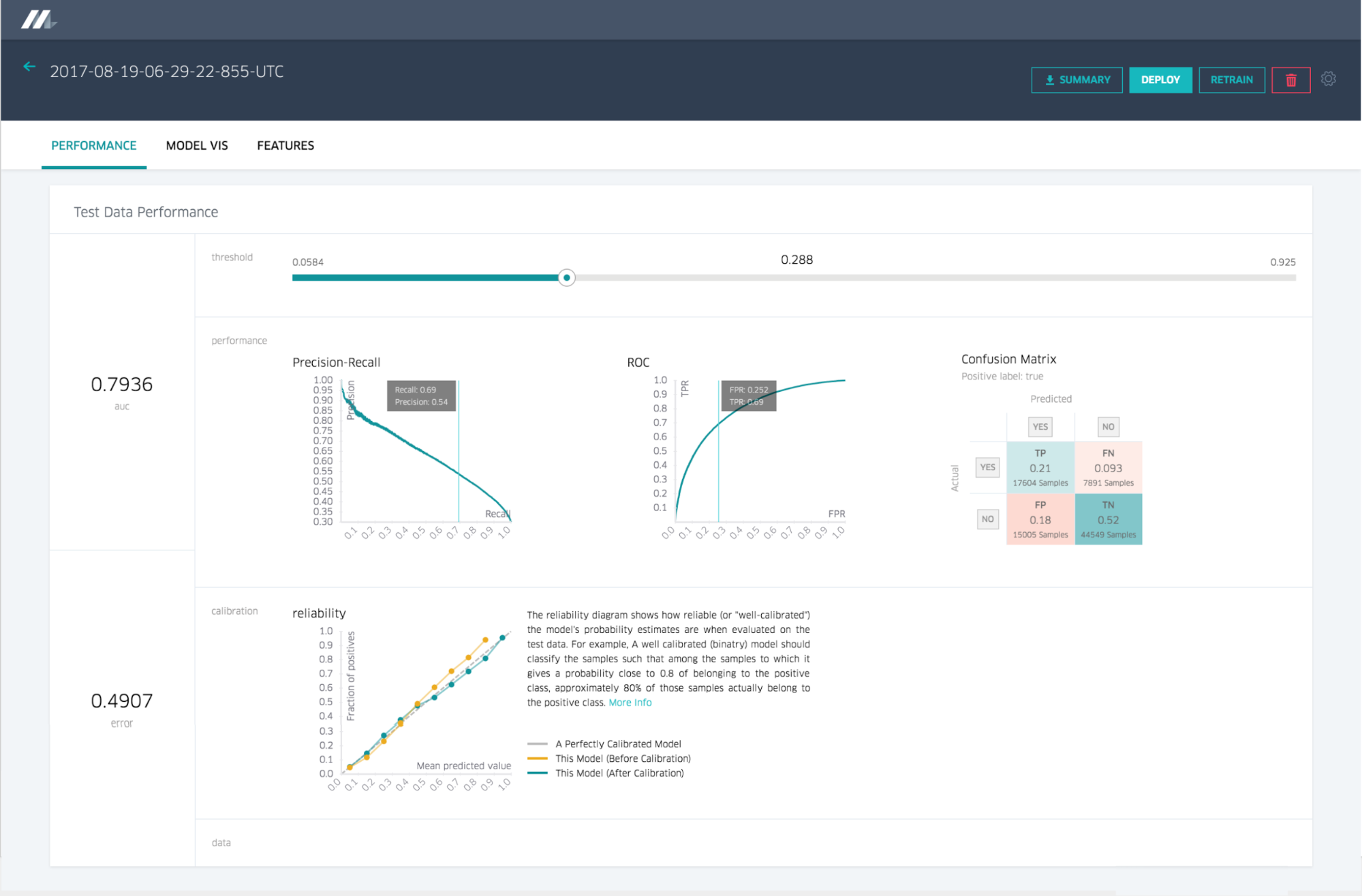

The model accuracy report for a regression model shows standard accuracy metrics and charts. Classification models would display a different set, as depicted below in Figures 4 and 5:

回归模型的模型准确度报告显示标准准确度指标和图表。 分类模型将显示不同的集合,如下图 4 和图 5 所示:

Figure 4: Regression model reports show regression-related performance metrics.

Figure 4: Regression model reports show regression-related performance metrics.

Figure 5: Binary classification performance reports show classification-related performance metrics.

Figure 5: Binary classification performance reports show classification-related performance metrics.

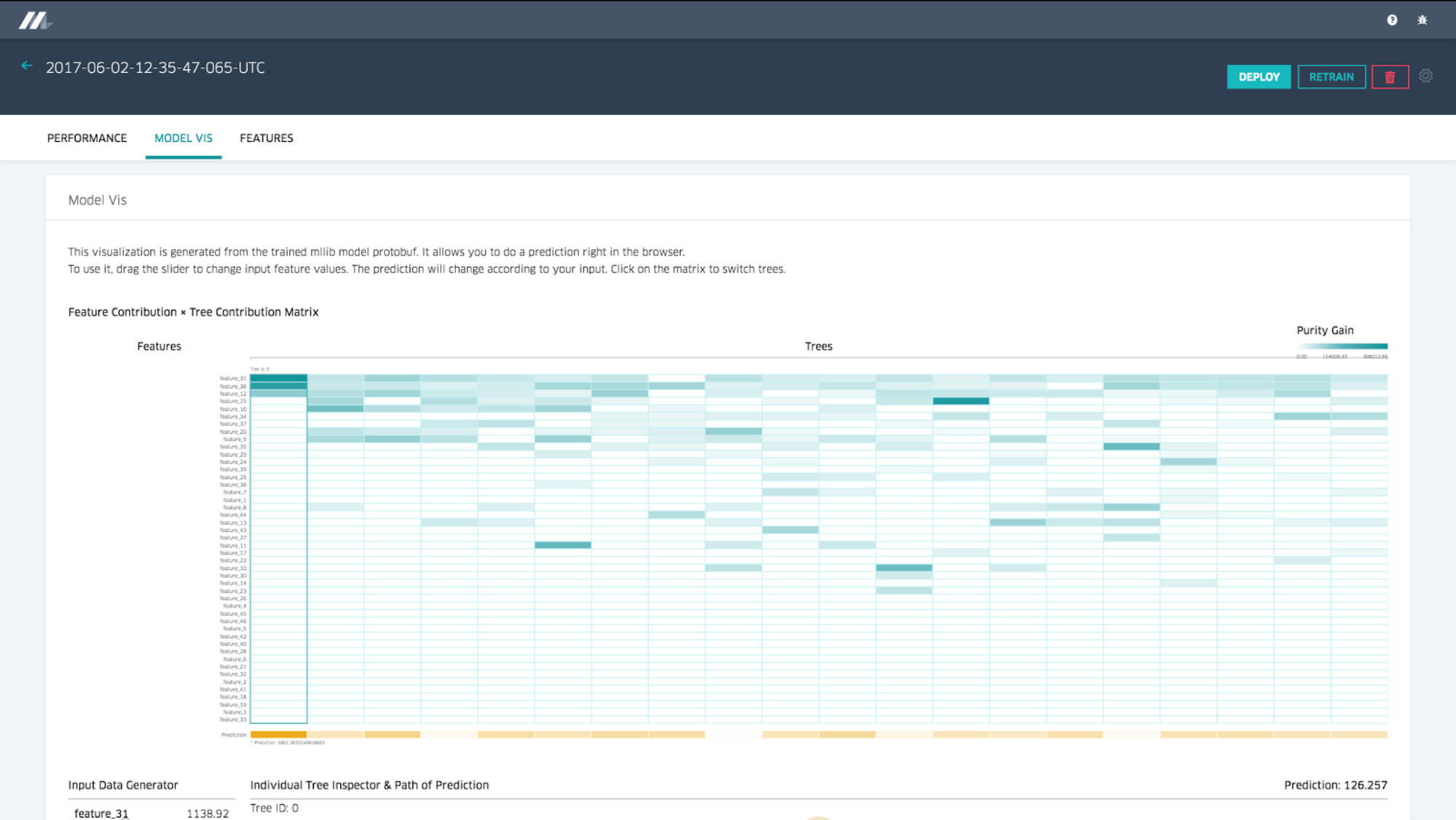

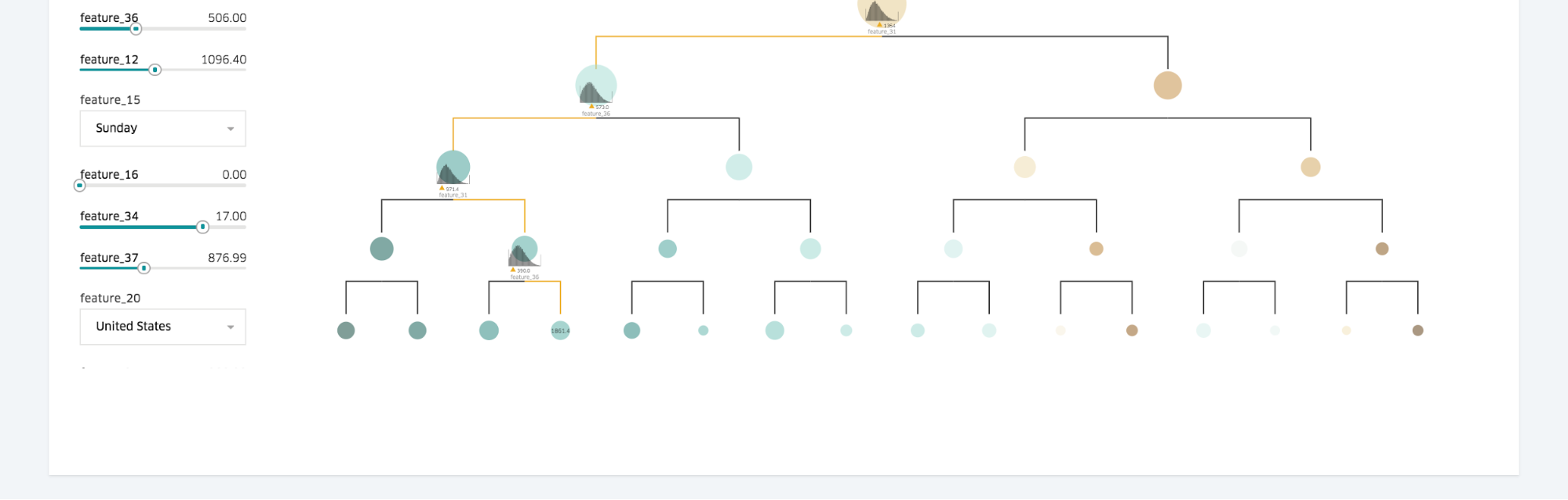

Decision tree visualization

For important model types, we provide sophisticated visualization tools to help modelers understand why a model behaves as it does, as well as to help debug it if necessary. In the case of decision tree models, we let the user browse through each of the individual trees to see their relative importance to the overall model, their split points, the importance of each feature to a particular tree, and the distribution of data at each split, among other variables. The user can specify feature values and the visualization will depict the triggered paths down the decision trees, the prediction per tree, and the overall prediction for the model, as pictured in Figure 6 below:

对于重要的模型类型,我们提供复杂的可视化工具来帮助建模者理解模型行为的原因,并在必要时帮助调试它。 在决策树模型的情况下,我们让用户浏览每棵树以查看它们对整个模型的相对重要性、它们的分割点、每个特征对特定树的重要性以及每个树的数据分布 分裂等变量。 用户可以指定特征值,可视化将描绘决策树的触发路径、每棵树的预测以及模型的整体预测,如下图 6 所示:

Figure 6: Tree models can be explored with powerful tree visualizations.

Figure 6: Tree models can be explored with powerful tree visualizations.

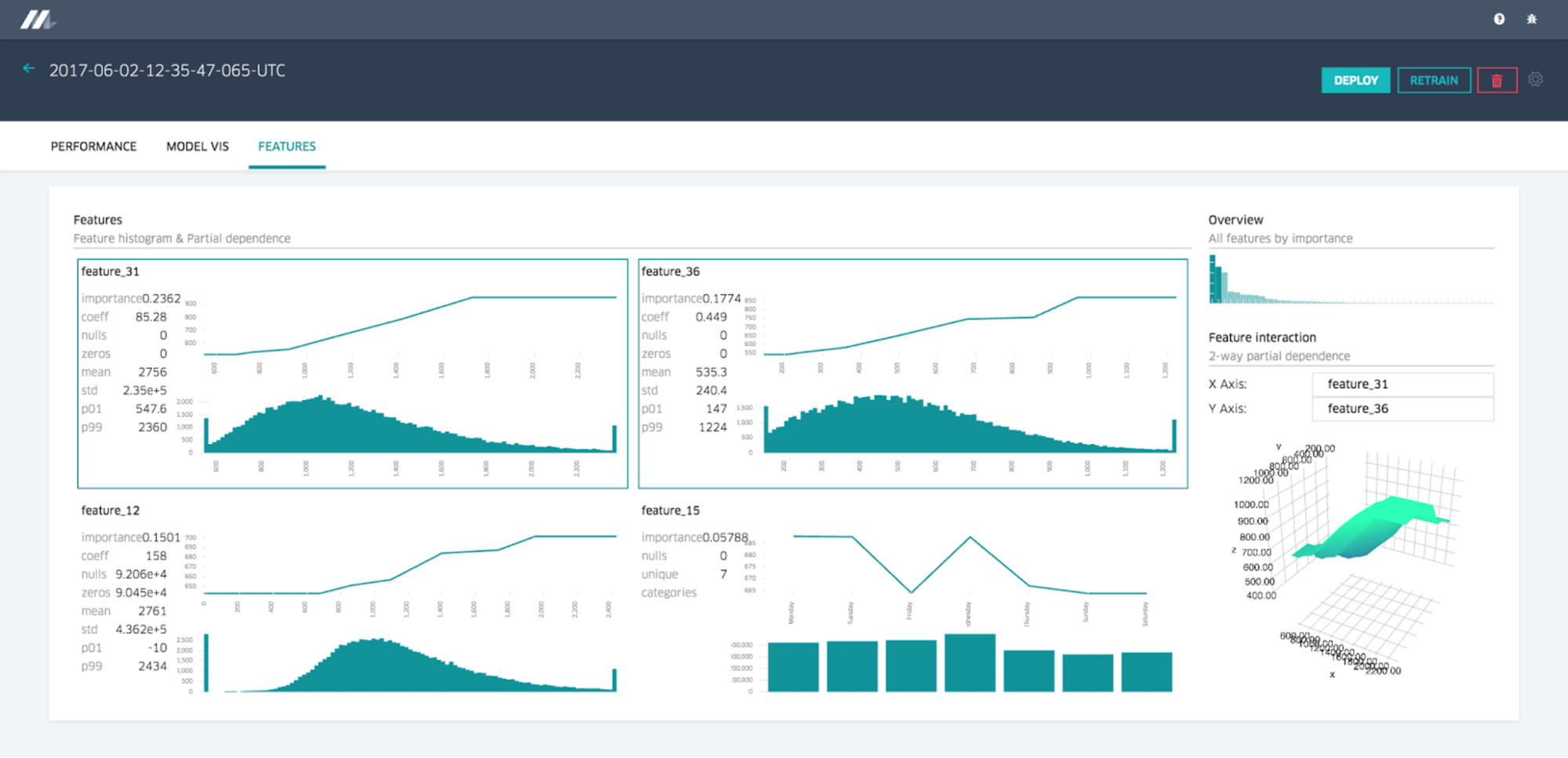

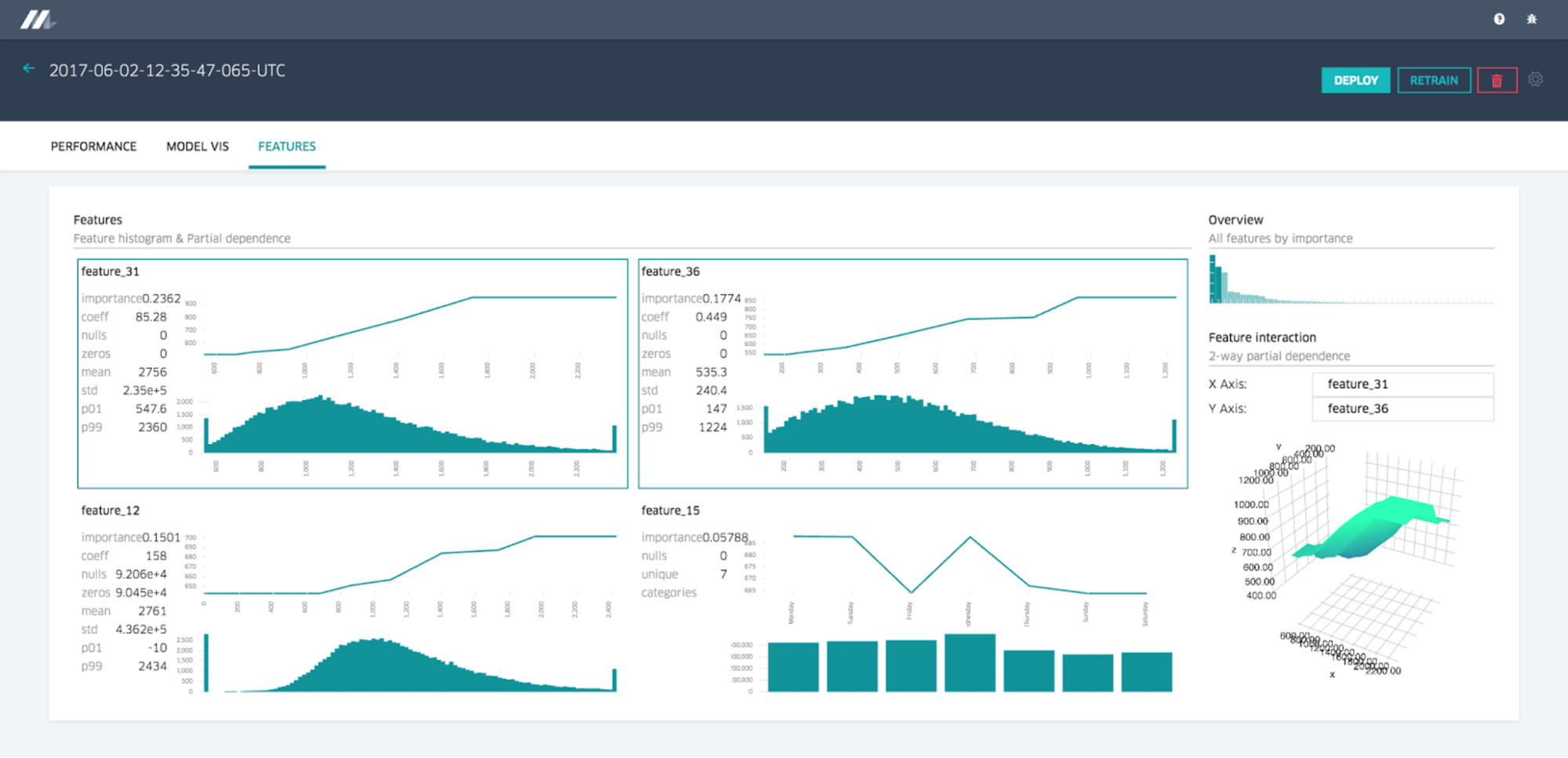

Feature report

Michelangelo provides a feature report that shows each feature in order of importance to the model along with partial dependence plots and distribution histograms. Selecting two features lets the user understand the feature interactions as a two-way partial dependence diagram, as showcased below:

Michelangelo 提供了一份特征报告,其中按对模型的重要性顺序显示每个特征以及部分依赖图和分布直方图。 选择两个特征可以让用户将特征交互理解为双向部分依赖图,如下所示:

Figure 7: Features, their impact on the model, and their interactions can be explored though a feature report.

Figure 7: Features, their impact on the model, and their interactions can be explored though a feature report.

Deploy models

Michelangelo has end-to-end support for managing model deployment via the UI or API and three modes in which a model can be deployed:

- Offline deployment. The model is deployed to an offline container and run in a Spark job to generate batch predictions either on demand or on a repeating schedule.

- Online deployment. The model is deployed to an online prediction service cluster (generally containing hundreds of machines behind a load balancer) where clients can send individual or batched prediction requests as network RPC calls.

- Library deployment. We intend to launch a model that is deployed to a serving container that is embedded as a library in another service and invoked via a Java API. (It is not shown in Figure 8, below, but works similarly to online deployment).

Michelangelo 对通过 UI 或 API 管理模型部署提供端到端的支持,以及可以部署模型的三种模式:

- 离线部署。 该模型部署到离线容器并在 Spark 作业中运行,以按需或按重复计划生成批量预测。

- 在线部署。 该模型部署到在线预测服务集群(通常包含负载均衡器后面的数百台机器),客户端可以在其中发送单个或批量预测请求作为网络 RPC 调用。

- 库部署。 我们打算启动一个模型,该模型部署到服务容器中,该容器作为库嵌入到另一个服务中并通过 Java API 调用。 (它未在下面的图 8 中显示,但其工作方式与在线部署类似)。

Figure 8: Models from the model repository are deployed to online and offline containers for serving.

Figure 8: Models from the model repository are deployed to online and offline containers for serving.

In all cases, the required model artifacts (metadata files, model parameter files, and compiled DSL expressions) are packaged in a ZIP archive and copied to the relevant hosts across Uber’s data centers using our standard code deployment infrastructure. The prediction containers automatically load the new models from disk and start handling prediction requests.

在所有情况下,所需的模型工件(元数据文件、模型参数文件和编译后的 DSL 表达式)都打包在 ZIP 存档中,并使用我们的标准代码部署基础架构复制到 Uber 数据中心的相关主机。 预测容器会自动从磁盘加载新模型并开始处理预测请求。

Many teams have automation scripts to schedule regular model retraining and deployment via Michelangelo’s API. In the case of the UberEATS delivery time models, training and deployment are triggered manually by data scientists and engineers through the web UI.

许多团队都有自动化脚本,可以通过 Michelangelo 的 API 来安排定期的模型重新训练和部署。 对于 UberEATS 交付时间模型,数据科学家和工程师通过 Web UI 手动触发训练和部署。

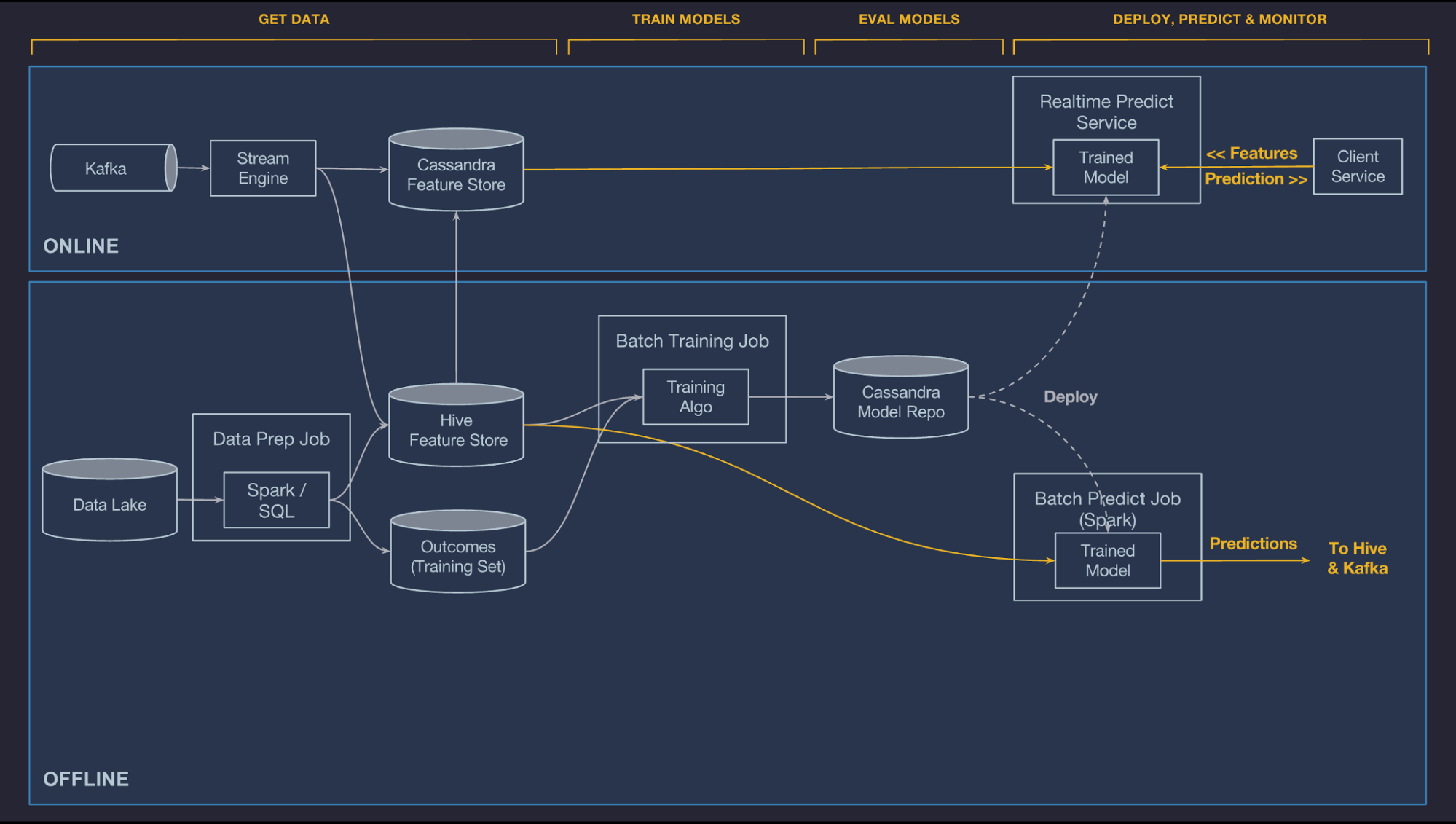

Make predictions

Once models are deployed and loaded by the serving container, they are used to make predictions based on feature data loaded from a data pipeline or directly from a client service. The raw features are passed through the compiled DSL expressions which can modify the raw features and/or fetch additional features from the Feature Store. The final feature vector is constructed and passed to the model for scoring. In the case of online models, the prediction is returned to the client service over the network. In the case of offline models, the predictions are written back to Hive where they can be consumed by downstream batch jobs or accessed by users directly through SQL-based query tools, as depicted below:

一旦模型被服务容器部署和加载,它们将用于根据从数据管道或直接从客户端服务加载的特征数据进行预测。 原始特征通过编译后的 DSL 表达式传递,该表达式可以修改原始特征和/或从特征存储中获取附加特征。 构建最终的特征向量并将其传递给模型进行评分。 在在线模型的情况下,预测通过网络返回给客户端服务。 在离线模型的情况下,预测被写回 Hive,在那里它们可以被下游批处理作业使用或由用户直接通过基于 SQL 的查询工具访问,如下所示:

Figure 9: Online and offline prediction services use sets of feature vectors to generate predictions.

Figure 9: Online and offline prediction services use sets of feature vectors to generate predictions.

Referencing models

More than one model can be deployed at the same time to a given serving container. This allows safe transitions from old models to new models and side-by-side A/B testing of models. At serving time, a model is identified by its UUID and an optional tag (or alias) that is specified during deployment. In the case of an online model, the client service sends the feature vector along with the model UUID or model tag that it wants to use; in the case of a tag, the container will generate the prediction using the model most recently deployed to that tag. In the case of batch models, all deployed models are used to score each batch data set and the prediction records contain the model UUID and optional tag so that consumers can filter as appropriate.

可以将多个模型同时部署到给定的服务容器。这允许从旧模型到新模型的安全转换以及模型的并行 A/B 测试。在服务时,模型由其 UUID 和部署期间指定的可选标签(或别名)标识。在在线模型的情况下,客户端服务将特征向量连同它想要使用的模型 UUID 或模型标签一起发送;对于标签,容器将使用最近部署到该标签的模型生成预测。在批处理模型的情况下,所有部署的模型都用于对每个批处理数据集进行评分,并且预测记录包含模型 UUID 和可选标签,以便消费者可以进行适当的过滤。

If both models have the same signature (i.e. expect the same set of features) when deploying a new model to replace an old model, users can deploy the new model to the same tag as the old model and the container will start using the new model immediately. This allows customers to update their models without requiring a change in their client code. Users can also deploy the new model using just its UUID and then modify a configuration in the client or intermediate service to gradually switch traffic from the old model UUID to the new one.

如果在部署新模型替换旧模型时两个模型具有相同的签名(即期望相同的特征集),则用户可以将新模型部署到与旧模型相同的标签,容器将开始使用新模型立即地。这允许客户更新他们的模型而无需更改他们的客户端代码。用户还可以仅使用其 UUID 部署新模型,然后在客户端或中间服务中修改配置,以逐渐将流量从旧模型 UUID 切换到新模型。

For A/B testing of models, users can simply deploy competing models either via UUIDs or tags and then use Uber’s experimentation framework from within the client service to send portions of the traffic to each model and track performance metrics.

对于模型的 A/B 测试,用户可以简单地通过 UUID 或标签部署竞争模型,然后在客户端服务中使用 Uber 的实验框架将部分流量发送到每个模型并跟踪性能指标。

Scale and latency

Since machine learning models are stateless and share nothing, they are trivial to scale out, both in online and offline serving modes. In the case of online models, we can simply add more hosts to the prediction service cluster and let the load balancer spread the load. In the case of offline predictions, we can add more Spark executors and let Spark manage the parallelism.

由于机器学习模型是无状态的并且不共享任何内容,因此无论是在线还是离线服务模式,它们都可以轻松扩展。 在在线模型的情况下,我们可以简单地向预测服务集群添加更多主机,并让负载均衡器分散负载。 在离线预测的情况下,我们可以添加更多的 Spark 执行器,让 Spark 管理并行性。

Online serving latency depends on model type and complexity and whether or not the model requires features from the Cassandra feature store. In the case of a model that does not need features from Cassandra, we typically see P95 latency of less than 5 milliseconds (ms). In the case of models that do require features from Cassandra, we typically see P95 latency of less than 10ms. The highest traffic models right now are serving more than 250,000 predictions per second.

在线服务延迟取决于模型类型和复杂性,以及模型是否需要来自 Cassandra 特征存储的特征。 对于不需要 Cassandra 特征的模型,我们通常会看到 P95 延迟小于 5 毫秒 (ms)。 对于确实需要 Cassandra 特征的模型,我们通常会看到 P95 延迟小于 10 毫秒。 目前最高流量的模型每秒可提供超过 250,000 个预测。

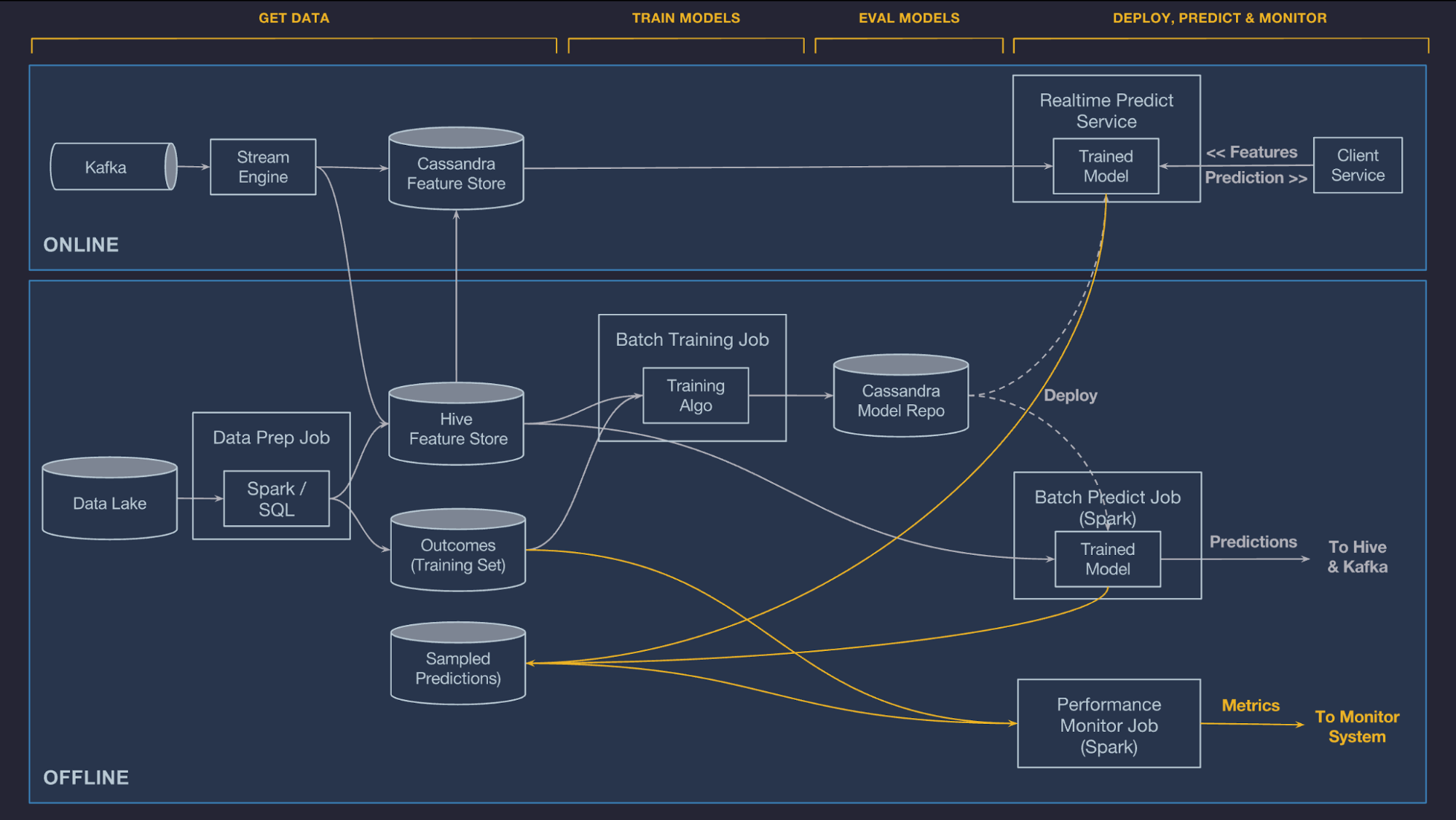

Monitor predictions

When a model is trained and evaluated, historical data is always used. To make sure that a model is working well into the future, it is critical to monitor its predictions so as to ensure that the data pipelines are continuing to send accurate data and that production environment has not changed such that the model is no longer accurate.

在训练和评估模型时,始终使用历史数据。 为了确保模型在未来运行良好,监控其预测至关重要,以确保数据管道继续发送准确的数据,并且生产环境没有发生变化,从而导致模型不再准确。

To address this, Michelangelo can automatically log and optionally hold back a percentage of the predictions that it makes and then later join those predictions to the observed outcomes (or labels) generated by the data pipeline. With this information, we can generate ongoing, live measurements of model accuracy. In the case of a regression model, we publish R-squared/coefficient of determination, root mean square logarithmic error (RMSLE), root mean square error (RMSE), and mean absolute error metrics to Uber’s time series monitoring systems so that users can analyze charts over time and set threshold alerts, as depicted below:

为了解决这个问题,米开朗基罗可以自动记录并选择性地保留其做出的预测的百分比,然后将这些预测与数据管道生成的观察结果(或标签)结合起来。 有了这些信息,我们就可以生成模型准确性的持续实时测量结果。 在回归模型的情况下,我们将 R 平方/确定系数、均方根对数误差 (RMSLE)、均方根误差 (RMSE) 和平均绝对误差指标发布到 Uber 的时间序列监控系统,以便用户可以 随着时间的推移分析图表并设置阈值警报,如下所示:

Figure 10: Predictions are sampled and compared to observed outcomes to generate model accuracy metrics.

Figure 10: Predictions are sampled and compared to observed outcomes to generate model accuracy metrics.

Management plane, API, and web UI

The last important piece of the system is an API tier. This is the brains of the system. It consists of a management application that serves the web UI and network API and integrations with Uber’s system monitoring and alerting infrastructure. This tier also houses the workflow system that is used to orchestrate the batch data pipelines, training jobs, batch prediction jobs, and the deployment of models both to batch and online containers.

系统的最后一个重要部分是 API 层。 这是系统的大脑。 它由一个管理应用程序组成,该应用程序为 Web UI 和网络 API 提供服务,并与 Uber 的系统监控和警报基础设施集成。 该层还包含用于编排批处理数据管道、训练作业、批处理预测作业以及将模型部署到批处理和在线容器的工作流系统。

Users of Michelangelo interact directly with these components through the web UI, the REST API, and the monitoring and alerting tools.

Michelangelo 的用户通过 Web UI、REST API 以及监控和警报工具直接与这些组件交互。

Building on the Michelangelo platform

In the coming months, we plan to continue scaling and hardening the existing system to support both the growth of our set of customer teams and Uber’s business overall. As the platform layers mature, we plan to invest in higher level tools and services to drive democratization of machine learning and better support the needs of our business:

- AutoML. This will be a system for automatically searching and discovering model configurations (algorithm, feature sets, hyper-parameter values, etc.) that result in the best performing models for given modeling problems. The system would also automatically build the production data pipelines to generate the features and labels needed to power the models. We have addressed big pieces of this already with our Feature Store, our unified offline and online data pipelines, and hyper-parameter search feature. We plan to accelerate our earlier data science work through AutoML. The system would allow data scientists to specify a set of labels and an objective function, and then would make the most privacy-and security-aware use of Uber’s data to find the best model for the problem. The goal is to amplify data scientist productivity with smart tools that make their job easier.

- Model visualization. Understanding and debugging models is increasingly important, especially for deep learning. While we have made some important first steps with visualization tools for tree-based models, much more needs to be done to enable data scientists to understand, debug, and tune their models and for users to trust the results.

- Online learning. Most of Uber’s machine learning models directly affect the Uber product in real time. This means they operate in the complex and ever-changing environment of moving things in the physical world. To keep our models accurate as this environment changes, our models need to change with it. Today, teams are regularly retraining their models in Michelangelo. A full platform solution to this use case involves easily updateable model types, faster training and evaluation architecture and pipelines, automated model validation and deployment, and sophisticated monitoring and alerting systems. Though a big project, early results suggest substantial potential gains from doing online learning right.

- Distributed deep learning. An increasing number of Uber’s machine learning systems are implementing deep learning technologies. The user workflow of defining and iterating on deep learning models is sufficiently different from the standard workflow such that it needs unique platform support. Deep learning use cases typically handle a larger quantity of data, and different hardware requirements (i.e. GPUs) motivate further investments into distributed learning and a tighter integration with a flexible resource management stack.

在接下来的几个月里,我们计划继续扩展和强化现有系统,以支持我们的客户团队和 Uber 整体业务的增长。随着平台层的成熟,我们计划投资更高级别的工具和服务,以推动机器学习的民主化并更好地支持我们的业务需求:

- 自动机器学习。这将是一个系统,用于自动搜索和发现模型配置(算法、特征集、超参数值等),从而为给定的建模问题产生最佳性能的模型。该系统还将自动构建生产数据管道,以生成为模型提供动力所需的特征和标签。我们已经通过我们的特征存储、我们统一的离线和在线数据管道以及超参数搜索特征解决了这一问题。我们计划通过 AutoML 加速我们早期的数据科学工作。该系统将允许数据科学家指定一组标签和一个目标函数,然后将最注重隐私和安全的 Uber 数据用于找到解决问题的最佳模型。目标是通过智能工具提高数据科学家的工作效率,使他们的工作更轻松。

- 模型可视化。 理解和调试模型越来越重要,尤其是对于深度学习。虽然我们已经使用基于树的模型的可视化工具迈出了一些重要的第一步,但还需要做更多的工作来使数据科学家能够理解、调试和调整他们的模型,并使用户相信结果。

- 在线学习。 Uber 的大部分机器学习模型都会实时直接影响 Uber 产品。这意味着它们在物理世界中移动事物的复杂且不断变化的环境中运行。为了在这种环境变化时保持我们的模型准确,我们的模型需要随之改变。今天,团队定期在米开朗基罗重新训练他们的模型。此用例的完整平台解决方案涉及可轻松更新的模型类型、更快的训练和评估架构和管道、自动模型验证和部署以及复杂的监控和警报系统。虽然是一个大项目,但早期结果表明,正确地进行在线学习可以带来巨大的潜在收益。

- 分布式深度学习。越来越多的 Uber 机器学习系统正在实施深度学习技术。定义和迭代深度学习模型的用户工作流程与标准工作流程有很大不同,因此需要独特的平台支持。深度学习用例通常处理大量数据,不同的硬件要求(即 GPU)促使进一步投资于分布式学习以及与灵活资源管理堆栈的更紧密集成。

Major Updates

来源

- How Uber’s Michelangelo Contributed To The ML World

- Michelangelo PyML: Introducing Uber’s Platform for Rapid Python ML Model Development

- Evolving Michelangelo Model Representation for Flexibility at Scale

In 2017, Michelangelo was launched with a monolithic architecture that managed tightly coupled workflows and Spark jobs for training and serving. Michelangelo had specific pipeline definitions for each supported model type. Offline serving was handled through Spark, and online serving was handled using custom APIs.

In 2018, Uber extended Michelangelo through PyML to make it easier for Python users to train and deploy their models. These models can contain arbitrary use code and use any Python package or native Linux libraries. It allows data scientists to locally run an identical copy of a model in real-time experiments and large-scale parallelised offline prediction works.

The functionalities are accessible through simple Python SDK and can be leveraged directly through development environments such as Jupyter notebooks without switching between separate applications. Since this solution leverages several open-source components, it can be easily transferred to other ML platforms and model serving systems.

In 2019, the team at Uber decided to update Michelangelo model representation for flexibility at scale. The original model supported only a subset of Spark MLlib models with in-house custom model serialisation and representation. This prevented customers from experimenting with complex model pipelines. The team then decided to develop Michelangelo’s use of Spark MLlib in areas such as model representation, persistence, and online serving.